IEEE WCCI 2022 is the world’s largest technical event on computational intelligence, featuring the three flagship conferences of the IEEE Computational Intelligence Society (CIS) under one roof: the 2022 International Joint Conference on Neural Networks (IJCNN 2022), the 2022 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE 2022), and the 2022 IEEE Congress on Evolutionary Computation (IEEE CEC 2022). The event was held online and in Padua, Italy.

ULTRACEPT researchers attended the event to share their research:

Shaping the Ultra-Selectivity of a Looming Detection Neural Network from Non-linear Correlation of Radial Motion

University of Lincoln researcher Mu Hua finished his postgraduate program last July and is now an honorary researcher working on ULTRACEPT’s work package 1. He attended the IEEE World Congress on Computational Intelligence 2022 remotely and gave an oral presentation of his latest work on the lobula plate/lobula columnar type 2 (LPLC2) neurons found in the visual pathway of the fruit fly Drosophila.

Mu Hua presented his recent work on modelling the LPLC2 in his paper titled ‘Shaping the Ultra-Selectivity of a Looming Detection Neural Network from Non-linear Correlation of Radial Motion’.

H. Luan, M. Hua, J. Peng, S. Yue, S. Chen and Q. Fu, “Accelerating Motion Perception Model Mimics the Visual Neuronal Ensemble of Crab,” 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 2022, pp. 1-8, https://doi.org/10.1109/IJCNN55064.2022.9892540.

In a pre-recorded video, he explained how the proposed LPLC2 neural network achieves its ultra-selectivity to the initial location of a stimulus within the receptive field and to object surface brightness, as well as its preference for approaching motion patterns, through a high-level non-linear combination of motion cues. The work demonstrates the network's potential for distinguishing near misses, so that true collisions can be recognised correctly and efficiently.

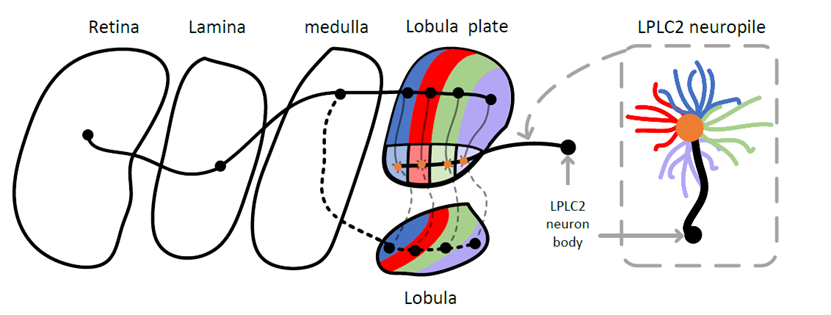

Figure 1 shows a schematic of the Drosophila visual system. The ON channel is represented by solid lines and the OFF channel by dashed lines. The blue, red, green and purple areas show different types of directionally selective neurons (DSNs). More specifically, the blue area represents neurons that prefer upwards motion whilst the purple area represents neurons that prefer downwards motion; the red and green areas show a preference for leftwards and rightwards movement respectively. The dot-headed lines show the pathway of visual signals received by photoreceptors in the retina layer, which are first processed in the lamina and subsequently separated in the medulla into parallel ON/OFF channels with polarity selectivity. After that, signals in the ON channel are passed to various types of DSNs (T4s) in the lobula plate layer for directional motion calculation.

The visual signals estimated by T4 interneurons are then combined with those of T5 neurons in the OFF channel, and further filtered through to lobula plate tangential cells (LPTCs, represented by orange dots within the slightly transparent areas). Note that the number of lines does not reflect the actual number of neurons in the Drosophila visual system.
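The separation into parallel ON and OFF channels described above can be illustrated with a small example. The following is a minimal sketch, assuming the split is modelled as half-wave rectification of the luminance change between two frames, which is a common choice in bio-inspired motion models; the function name `split_on_off` and the toy frames are hypothetical and are not taken from the paper.

```python
def split_on_off(prev_frame, curr_frame):
    """Split the luminance change between two frames into ON and OFF signals.

    Assumption: half-wave rectification of the frame difference stands in
    for the polarity-selective separation performed in the medulla.
    """
    on, off = [], []
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        diff = [c - p for p, c in zip(prev_row, curr_row)]
        on.append([max(d, 0.0) for d in diff])    # brightness increments only
        off.append([max(-d, 0.0) for d in diff])  # brightness decrements only
    return on, off


# Toy 2x2 frames: one pixel gets brighter, one gets darker.
prev = [[0.5, 0.5], [0.5, 0.5]]
curr = [[0.8, 0.5], [0.5, 0.1]]
on, off = split_on_off(prev, curr)
```

In this toy input the brightening pixel appears only in `on` and the darkening pixel only in `off`, so each downstream pathway sees a single contrast polarity.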

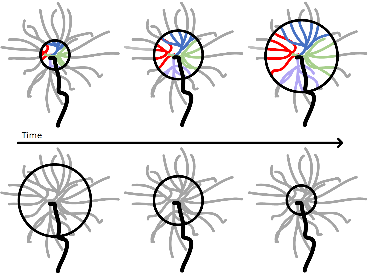

Figure 2 illustrates the directionally selective LPTC neurons being activated by an expanding edge (top) and remaining silent during recession (bottom). The black circle represents a dark looming motion pattern. As it expands, four types of T4/T5 interneurons, shown in four colours, each sense motion along one of the four cardinal directions. Directional information is then estimated within the T4 or T5 pathway. The ON channel motion estimated by T4 and the OFF channel motion estimated by T5 are then integrated by their post-synaptic LPTC neurons. The particular placement of LPTC neurons shown here is considered to influence the subsequent non-linear combination computed by LPLC2 neurons.
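To give a feel for how a direction-selective unit can estimate motion along one axis, here is a minimal sketch using a classic Hassenstein-Reichardt style delay-and-correlate detector. This is a textbook stand-in, not necessarily the formulation used in the paper; the function name `reichardt_response`, the one-step delay and the toy signals are assumptions made for illustration.

```python
def reichardt_response(left, right, delay=1):
    """Opponent delay-and-correlate response for two neighbouring inputs.

    left, right: luminance-change signals over time at two adjacent points.
    A positive result indicates left-to-right motion, a negative result
    indicates right-to-left motion.
    """
    total = 0.0
    for t in range(delay, len(left)):
        preferred = left[t - delay] * right[t]  # delayed left meets current right
        null = right[t - delay] * left[t]       # mirror-image arm
        total += preferred - null               # motion opponency
    return total


# An edge passes the left input one time step before the right input.
left = [0.0, 1.0, 0.0, 0.0]
right = [0.0, 0.0, 1.0, 0.0]
rightward = reichardt_response(left, right)
leftward = reichardt_response(right, left)
```

Swapping the two inputs flips the sign of the response, which is the kind of opponency between opposite directions that four detector types tuned to the cardinal directions would provide.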

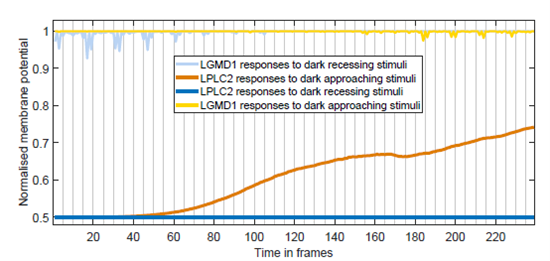

Figure 3 shows snapshots of one of the experimental stimuli, in which a square lies on a complex background. From top to bottom the square approaches; playing the sequence in reverse generates a receding stimulus. The curves on the right show the output of the proposed model and of the classic LGMD1 neural network chosen for comparison. The output of our proposed neural network shows that it is only activated by the approaching motion pattern, which fits the biological findings well.

Abstract

In this paper, a numerical neural network inspired by the lobula plate/lobula columnar type II (LPLC2), the ultraselective looming sensitive neurons identified within the visual system of Drosophila, is proposed utilising non-linear computation. This method aims to be one of the explorations toward solving the collision perception problem resulting from radial motion. Taking inspiration from the distinctive structure and placement of directionally selective neurons (DSNs) named T4/T5 interneurons and their post-synaptic neurons, the motion opponency along four cardinal directions is computed in a non-linear way and subsequently mapped into four quadrants. More precisely, local motion excites adjacent neurons ahead of the ongoing motion, whilst transferring inhibitory signals to presently-excited neurons with slight temporal delay. From comparative experimental results collected, the main contribution is established by sculpting the ultra-selective features of generating a vast majority of responses to dark centroid-emanated centrifugal motion patterns whilst remaining nearly silent to those starting from other quadrants of the receptive field (RF). The proposed method also distinguishes relatively dark approaching objects against the brighter backgrounds and light ones against dark backgrounds via exploiting ON/OFF parallel channels, which well fits the physiological findings. Accordingly, the proposed neural network consolidates the theory of non-linear computation in Drosophila’s visual system, a prominent paradigm for studying biological motion perception. This research also demonstrates the potential of being fused with attention mechanisms towards the utility in devices such as unmanned aerial vehicles (UAVs), protecting them from unexpected and imminent collision by calculating a safer flying pathway.
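The idea of mapping motion opponency into four quadrants and combining it non-linearly can be sketched in a few lines. The example below is an illustration under stated assumptions, not the paper's model: it assumes a grid of per-cell motion vectors is already available, it treats outward-minus-inward motion relative to the centre of the receptive field as the opponency signal, and it uses a product across quadrants as the non-linear combination. The names `quadrant_opponency` and `looming_response` are hypothetical.

```python
def quadrant_opponency(flow):
    """Rectified outward-minus-inward motion in each quadrant of the grid.

    flow: list of rows of (dx, dy) motion vectors, with +x pointing right
    and +y pointing down. The receptive-field centre is the grid centre.
    """
    rows, cols = len(flow), len(flow[0])
    cy, cx = (rows - 1) / 2.0, (cols - 1) / 2.0
    sums = {"top_left": 0.0, "top_right": 0.0,
            "bottom_left": 0.0, "bottom_right": 0.0}
    for y, row in enumerate(flow):
        for x, (dx, dy) in enumerate(row):
            if x == cx or y == cy:
                continue  # cells on the central axes belong to no quadrant
            sx = 1.0 if x > cx else -1.0
            sy = 1.0 if y > cy else -1.0
            name = ("bottom" if sy > 0 else "top") + "_" + ("right" if sx > 0 else "left")
            sums[name] += sx * dx + sy * dy  # outward is positive, inward negative
    return {name: max(value, 0.0) for name, value in sums.items()}


def looming_response(flow):
    """Multiply the four quadrant signals (assumed non-linear combination)."""
    response = 1.0
    for value in quadrant_opponency(flow).values():
        response *= value
    return response


expanding = [[(-1, -1), (1, -1)], [(-1, 1), (1, 1)]]   # motion away from centre
receding = [[(1, 1), (-1, 1)], [(1, -1), (-1, -1)]]    # motion towards centre
translating = [[(1, 0), (1, 0)], [(1, 0), (1, 0)]]     # uniform rightward motion
```

Because the quadrant signals are multiplied, the response is non-zero only when all four quadrants report outward motion at once, so `looming_response(expanding)` is positive whilst the receding and translating patterns give zero.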

A Bio-inspired Dark Adaptation Framework for Low-light Image Enhancement

Fang Lei is a PhD Scholar at the University of Lincoln. She presented a poster on her research, ‘A Bio-inspired Dark Adaptation Framework for Low-light Image Enhancement’.

F. Lei, “A Bio-inspired Dark Adaptation Framework for Low-light Image Enhancement,” 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 2022, pp. 1-8, https://doi.org/10.1109/IJCNN55064.2022.9892877

Abstract

In low light conditions, image enhancement is critical for vision-based artificial systems since details of objects in dark regions are buried. Moreover, enhancing the low-light image without introducing too many irrelevant artifacts is important for visual tasks like motion detection. However, conventional methods always have the risk of “bad” enhancement. Nocturnal insects show remarkable visual abilities at night time, and their adaptations in light responses provide inspiration for low-light image enhancement. In this paper, we aim to adopt the neural mechanism of dark adaptation for adaptively raising intensities whilst preserving the naturalness. We propose a framework for enhancing low-light images by implementing the dark adaptation operation with proper adaptation parameters in R, G and B channels separately. Specifically, the dark adaptation in this paper consists of a series of canonical neural computations, including the power law adaptation, divisive normalization and adaptive rescaling operations. Experiments show that the proposed bioinspired dark adaptation framework is more efficient and can better preserve the naturalness of the image compared to existing methods.

Model

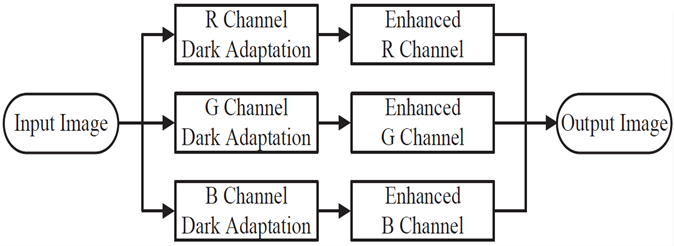

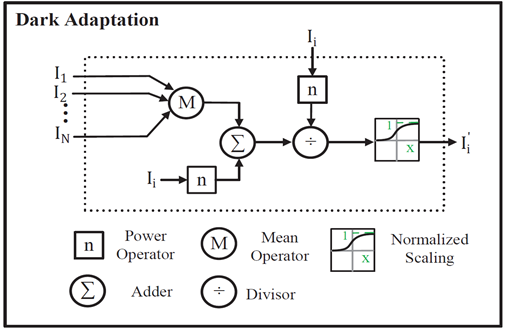

The proposed bio-inspired dark adaptation framework is shown in Fig. 1. The key idea of the dark adaptation is to adaptively raise the intensities of dark pixels by a series of canonical neural computations (see Fig. 2).
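The chain of canonical computations named in the abstract (power law adaptation, divisive normalization and adaptive rescaling, applied to the R, G and B channels separately) can be sketched as follows. This is a minimal illustration, not the paper's implementation: the exact form of each step, the exponent `gamma`, the small constant `epsilon` and the function names are all assumptions.

```python
def dark_adapt_channel(channel, gamma=0.5, epsilon=1e-6):
    """Apply three assumed adaptation steps to one colour channel.

    channel: flat list of intensities in [0, 1].
    """
    # 1. Power-law adaptation: an exponent below 1 lifts dark values most.
    powered = [v ** gamma for v in channel]
    # 2. Divisive normalisation: each value is divided by itself plus the
    #    mean channel activity (assumed form of the normalisation pool).
    mean_activity = sum(powered) / len(powered)
    normalised = [p / (p + mean_activity + epsilon) for p in powered]
    # 3. Adaptive rescaling: stretch so the brightest pixel maps to 1.
    peak = max(normalised)
    if peak == 0.0:
        return list(normalised)  # completely black channel stays black
    return [n / peak for n in normalised]


def enhance_low_light(pixels, gammas=(0.5, 0.5, 0.5)):
    """Adapt R, G and B separately; pixels is a list of (r, g, b) tuples."""
    channels = list(zip(*pixels))
    adapted = [dark_adapt_channel(list(ch), g) for ch, g in zip(channels, gammas)]
    return list(zip(*adapted))


dark_pixels = [(0.01, 0.02, 0.01), (0.04, 0.05, 0.03), (0.09, 0.08, 0.10)]
enhanced = enhance_low_light(dark_pixels)
```

Every step in this sketch is monotonic, so the brightness ordering of pixels within a channel is preserved whilst dark values are raised; the per-channel `gammas` tuple is where channel-specific adaptation parameters would be set.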

Results

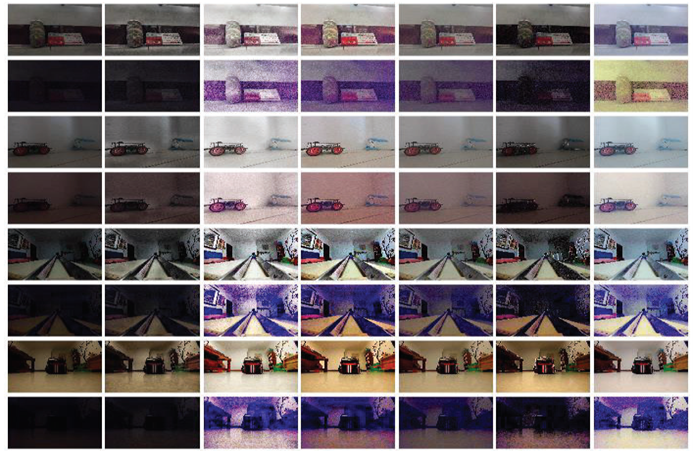

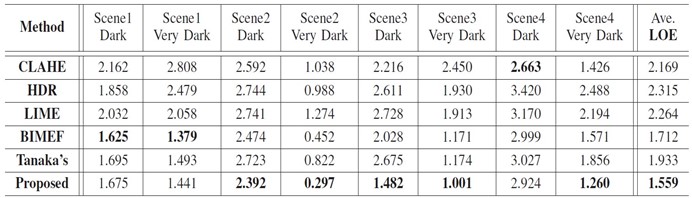

We compare the proposed method with existing low-light image enhancement methods in terms of visual performance, lightness order error (LOE), and average running time. The experimental results are shown in the accompanying figures.
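For readers unfamiliar with the LOE metric, the following is a minimal sketch assuming the commonly used definition: the lightness of a pixel is its maximum over R, G and B, and the error counts the pixel pairs whose relative lightness order differs between the original and the enhanced image, averaged over pixels. The function names are hypothetical, and practical evaluations usually downsample the images first because the comparison is quadratic in the number of pixels.

```python
def lightness(pixels):
    """Lightness of each pixel, taken as the maximum of its R, G, B values."""
    return [max(p) for p in pixels]


def lightness_order_error(original, enhanced):
    """Average number of pairwise lightness-order changes per pixel.

    original, enhanced: lists of (r, g, b) tuples of equal length.
    A value of 0 means the enhancement preserved the lightness order.
    """
    lo, le = lightness(original), lightness(enhanced)
    m = len(lo)
    total = 0
    for x in range(m):
        for y in range(m):
            # Count the pair when the order relation flips after enhancement.
            if (lo[x] >= lo[y]) != (le[x] >= le[y]):
                total += 1
    return total / m


original = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (0.3, 0.3, 0.3)]
order_kept = [(0.4, 0.4, 0.4), (0.5, 0.5, 0.5), (0.6, 0.6, 0.6)]
order_swapped = [(0.5, 0.5, 0.5), (0.4, 0.4, 0.4), (0.6, 0.6, 0.6)]
```

Here `lightness_order_error(original, order_kept)` is zero, whereas `order_swapped` yields a positive error because the two darkest pixels exchange their lightness order; a lower LOE therefore indicates better preservation of naturalness.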